Neuromorphic computing is a powerful tool for identifying time-varying patterns, but is often less effective than some AI-based techniques for more complex tasks. Researchers at the iCAS Lab, directed by Ramtin Zand at the University of South Carolina, are working on an NSF CAREER project to show how the capabilities of neuromorphic systems could be improved by blending them with specialised machine learning systems, without sacrificing their impressive energy efficiency. Using their approach, the team aims to show how the gestures of American Sign Language could be instantly translated into written and spoken language.

Artificial intelligence is playing an increasingly important role in our everyday lives, and as its use expands, there is now a growing need for everyday hardware to become smarter, faster, and more efficient. In particular, small devices including smartphones and wearable technologies are being used to perform more and more complex tasks, often in real time.

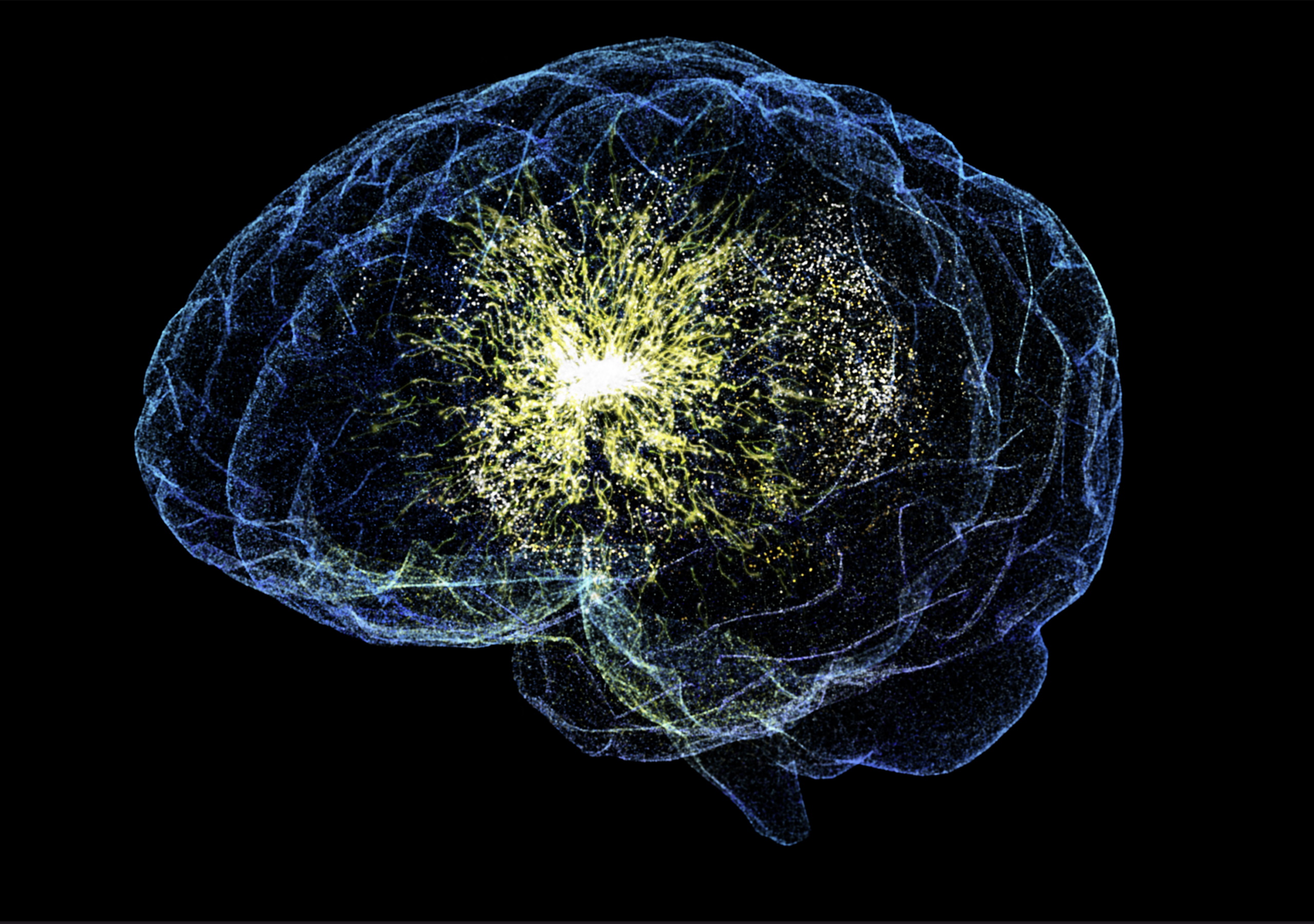

To keep pace with this growing demand, many researchers are focused on the possibilities of ‘neuromorphic computing’: an approach inspired by the inner workings of the human brain. By mimicking the function of our deeply interconnected neural circuits, these systems can enable computers to process multiple tasks at once, allowing them to operate far more quickly and efficiently.

Neuromorphic computing is especially powerful when used in tandem with specialised cameras named dynamic vision sensors, or DVSs. Unlike regular cameras, which record every pixel in every frame, DVSs only report the parts of a scene where the brightness changes, typically because something is moving, while any still parts are automatically filtered out. At their most basic level, neuromorphic systems can keep track of these movements, allowing them to identify time-varying patterns which may be extremely complex.
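To make the idea of filtering out still parts of a scene more concrete, the short Python sketch below compares two tiny greyscale frames and reports only the pixels that changed. It is a simplified illustration rather than a model of any particular sensor: the function name `dvs_events`, the fixed brightness threshold, and the frame-by-frame comparison are all assumptions made for this example, whereas a real DVS responds to brightness changes at each pixel independently and continuously.

```python
def dvs_events(prev_frame, next_frame, threshold=10):
    """Return (row, col, polarity) for each pixel whose brightness changed.

    Polarity is +1 if the pixel got brighter and -1 if it got darker.
    Pixels that changed by less than `threshold` produce no event.
    (Hypothetical helper for illustration; not a real sensor API.)
    """
    events = []
    for r, (prev_row, next_row) in enumerate(zip(prev_frame, next_frame)):
        for c, (before, after) in enumerate(zip(prev_row, next_row)):
            delta = after - before
            if abs(delta) >= threshold:
                events.append((r, c, 1 if delta > 0 else -1))
    return events


# A bright "hand" pixel moves one step to the right.
# The bottom row is a static background and never changes.
frame_a = [[0,   0,  0,  0],
           [0, 200,  0,  0],
           [50, 50, 50, 50]]
frame_b = [[0,  0,   0,  0],
           [0,  0, 200,  0],
           [50, 50, 50, 50]]

events = dvs_events(frame_a, frame_b)
print(events)  # -> [(1, 1, -1), (1, 2, 1)]
```

Of the twelve pixels in each frame, only the two touched by the moving object generate any data, which is why this kind of sensor suits low-power processing.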

Yet despite their advantages, neuromorphic systems can still be outperformed by more conventional machine learning systems. Machine learning is a branch of AI which allows computers to learn from their experience of real data, helping them to improve their predictions over time. For tasks such as translation, question-answering, and object identification, this often makes machine learning systems faster and more accurate than their neuromorphic counterparts.

But compared with neuromorphic computing, machine learning systems often require larger, more complex models, making them too energy-intensive to be run by smartphones, wearable technologies, or other compact devices.

Through their research, Ramtin Zand and his team at the iCAS Lab show how this challenge could be overcome by blending neuromorphic computing with machine learning techniques: creating hybrid systems which don’t sacrifice either the efficiency of neuromorphic systems, or the speed and accuracy of machine learning.

The iCAS team’s decision to study sign language translation in the NSF CAREER project has a deep significance for millions of people around the world. For many people who are deaf or hard-of-hearing, sign languages such as American Sign Language, or ASL, are a vital tool for communication. However, they are only understood by a limited community, making it extremely difficult for the deaf and hard-of-hearing to communicate with the wider world.

To address this challenge, some AI-driven tools have now begun to appear which can translate ASL into written and spoken language in real time. However, to convert hand and arm movements into language, these tools don’t just need to recognise these gestures – they also need to understand the meanings behind each movement, and put them into context.

While many of these tools are now highly effective, they still often struggle to operate quickly enough to translate users’ hand and arm movements in real time. On top of this, the complexity of these systems makes them too power-hungry for small devices such as smartphones or wearable technologies.

Through their approach, the iCAS team considered how existing ASL translation tools could be blended with neuromorphic systems to make them faster, more accurate, and more efficient overall.

In their system, a DVS camera is first used to capture a user’s hand and arm movements, without recording unnecessary background information. Straight away, this allows the system to process movements more quickly, while consuming less power.

At this stage, neuromorphic computing is used for the initial recognition of the movements captured by the DVS camera, but doesn’t fully translate them. Instead, the identified movements are fed into another type of machine learning system, named a Transformer model.

These models are especially good at understanding language, since they can detect and interpret patterns in complex sequences – but would use up substantial amounts of power to recognise movements in the first place.

By allowing neuromorphic computing to handle this first part of the process, Zand’s team can therefore blend these two technologies together into a layered translation process: translating ASL gestures using far less power than a Transformer model operating by itself, without sacrificing speed or accuracy.
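The layered idea can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the iCAS team's implementation: the first stage uses a textbook leaky integrate-and-fire neuron as a stand-in for the neuromorphic front end, the gesture labels and event counts are invented, and `sequence_stage` is a trivial placeholder where a real system would run a Transformer model.

```python
def lif_neuron(inputs, leak=0.8, threshold=2.0):
    """Leaky integrate-and-fire neuron: returns a 0/1 spike per time step.

    The membrane potential decays by `leak` each step, accumulates the
    input, and resets to zero whenever it crosses `threshold`.
    """
    potential = 0.0
    spikes = []
    for x in inputs:
        potential = leak * potential + x
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0
        else:
            spikes.append(0)
    return spikes


def front_end(event_streams):
    """Stage 1 (hypothetical): one spiking detector per gesture.

    `event_streams` maps a gesture label to per-time-step event counts.
    Returns the gesture labels ordered by the time their detector fired.
    """
    fired = []
    for label, counts in event_streams.items():
        for t, spike in enumerate(lif_neuron(counts)):
            if spike:
                fired.append((t, label))
    return [label for _, label in sorted(fired)]


def sequence_stage(tokens):
    """Stage 2 placeholder: a real system would use a Transformer here."""
    return " ".join(token.lower() for token in tokens)


# Invented event counts over eight time steps for two gesture detectors.
streams = {
    "HELLO":  [0, 1, 2, 0, 0, 0, 0, 0],
    "THANKS": [0, 0, 0, 0, 1, 1, 1, 0],
}

tokens = front_end(streams)
print(tokens)                  # -> ['HELLO', 'THANKS']
print(sequence_stage(tokens))  # -> hello thanks
```

In this sketch the second stage only ever sees two tokens rather than eight time steps of raw sensor data, which hints at why handing the early recognition to a low-power front end could reduce the work left for the larger model.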

With these powerful capabilities, the researchers plan to deploy their layered system onto a tiny computer, which could be easily integrated into wearable devices such as smartwatches and augmented reality glasses. With the help of these technologies, they hope that a person using ASL could soon be able to hold a seamless conversation with someone who doesn’t know sign language.

Beyond its strong potential in sign language translation, the iCAS team also hope their CAREER project will benefit other areas that require real-time processing of information on compact, low-power hardware.

In healthcare, for instance, it could support wearable monitors that keep track of patients’ vital signs, detecting any sudden changes and alerting medical staff in real time. In robotics, this technology could allow robots to respond more naturally to human movements and language cues, making them safer and more effective for tasks such as assisting the elderly or disabled in their daily lives.

For now, Zand and his team are hopeful that their research will lay a strong groundwork for future studies into how neuromorphic computing could be integrated into powerful AI systems such as Transformer models.

In their upcoming research, the iCAS team aim to focus on how their approach could be applied to other domains and applications. As portable devices become more efficient, they also aim to take full advantage of these hardware improvements, making their hybrid system more accessible to users around the world.